Databricks Interview Questions to help you land the Job

Discover the top Databricks interview questions and expert tips for acing your next job interview. Prepare yourself with our comprehensive guide to get hired!

If you're curious, a quick thinker, and excited about working on some of the world's most challenging Big Data problems, Databricks could be an excellent fit. They are one of the biggest big data companies (excuse the pun...) and are always looking for the best talent to join their teams.

Getting a job at Databricks requires completing several rounds of technical and soft-skill interviews. And for those ill-prepared, the process is tough.

In this article, you'll find 11 of the most commonly asked Databricks interview questions with the type of responses they'll be looking for. Plus, the interview process and preparation tips. Let’s get into it.

What is Azure Databricks?

Databricks is a powerful Apache Spark-based data analytics platform optimized for Microsoft Azure cloud services. It's easy to deploy and use in minutes for data analysis, data management, and machine learning.

Databricks is currently being used by over 7,000 organizations worldwide and it's primary functions include facilitating large-scale data engineering, collaborative data science, full-lifecycle machine learning, and business analytics.

Databricks is headquartered in San Francisco but has offices and partnerships globally.

Why Does Databrick Ask Specific Questions?

Databrick interview questions are designed to successfully assess your code base management skills and evaluate your Databricks coding experience. Your responsibilities will include using Apache Spark to develop a highly effective data ingestion pipeline. Therefore, the questions are specifically structured to analyze your technical skills and personal traits.

Like in any interview, you can showcase how well your qualifications and skills suit the role.

How Hard is The Databrick Interview?

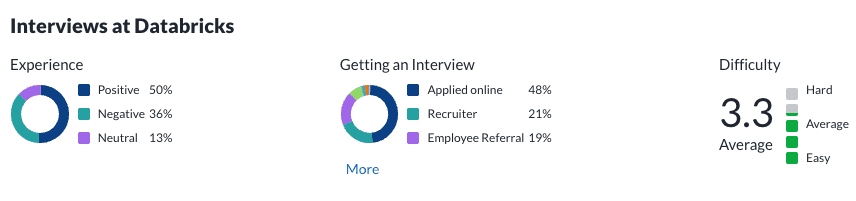

According to Glassdoor.) figures, users rated their Databricks interview experience as 54% and a difficulty score of 3.29 out of 5 where 5 is the highest difficulty level.

Databrick Interview Process

The Databrick engineering interview process is designed to encourage collaboration and conversation. It consists of a phone screen, where you'll be contacted for a basic screening of technical skills and personal traits.

You'll then be invited on-site for three rounds of interviews comprising technical and soft skill assessments lasting between 45 and 90 minutes.

1. Behavioral Round

During the behavioral round, you'll be presented with possible scenarios and asked how you dealt with a similar situation. Feel free to discuss how you responded to a challenging project, resolved a disagreement with a colleague, or something similar.

Behavioral-based questions are best answered using the STAR method. This structured response technique helps the hiring manager decide how your past behavior and experiences may help you adapt and work on various projects.

The STAR method stands for:

- Situation: discuss an applicable story about a past job, home, or school situation that needed a solution.

- Task: discuss the tasks required to resolve the problem and the result.

- Action: describe what role(s) you played in addressing the problem.

- Result: then talk about the consequences of your efforts, the challenges, and what you learned. For a great answer, explain how your actions benefited the organization or situation.

In addition to considering how well you've answered the question, your potential employers will look at whether you were confident at telling the story and if you were honest in your failures.

2. Technical Rounds

Code Challenge Assignment

The code challenge will be hands-on coding assessments to evaluate your problem-solving skills and abilities. You'll be expected to complete the challenge during the interview and can use a laptop to work through algorithm problems.

Coding Questions

The coding questions will focus more on code structure, design, debugging technical algorithms related to the data structure, and learning new domains. Some technical questions will probably use a language or framework you're unfamiliar with. This assesses your ability to read docs to solve a problem in a new area.

Interviews are customized based on a candidate's work experience and background. For full-stack type roles, the interview will focus more on web communication, e.g., HTTP, authentication, and Websockets. And for more low-level system engineering roles, the focus will be on areas like OS primitives and multi-threading.

Would you like a 4 day work week?

Other Code-Related Interview Topics

Here are some of the other concepts and topics likely to pop up during your Databricks coding interview:

- Loop in a linked list

- String breakdown

- Climbing word ladder

- Invert binary tree

- Substring in string

- FizzBuzz

- Targets and vicinities

- Cloud computation

- Github

3. Culture fit

They'll also want to find out who you are and your personality. In preparation, learn as much as possible about their operations, mission, and culture to gauge how well your character would fit their expectations.

If you successfully clear the rounds, congratulations! You'll be taken through the hiring formalities.

Top 11 Databrick Interview Questions and Answers

In addition to thoroughly reviewing these questions and answers, consider practicing with mock interviews, find coding questions online to practice, and study computer science fundamentals.

Here are the answers to 11 of the most common Databricks interview questions.

1. What Exactly is DBU?

A Databricks Unit (DBU) is a standardized unit of processing power used for measurement and pricing on the Databricks Lakehouse Platform. The number of DBUs a workload uses is determined by processing metrics like compute resources spent and the volume of data processed.

2. Explain the Difference Between Azure Databricks and Databricks?

Databricks is a self-sufficient open-source data management platform where data engineers use its collaborative environment for assistance in running data workloads. Its features can be hosted via Microsoft Azure, Amazon AWS, and Google Cloud Platform.

Azure Databricks is the result of Databricks features integration with Azure. Databricks also integrated its features with the AWS platform resulting in AWS Databricks. Azure Databricks delivers more functionality and is more popular. One of the reasons for its widespread use is it uses Azure AD authentication and other valuable Azure services to provide better solutions.

On the other hand, AWS Databricks is a Databricks hosting platform with comparatively less functionality than Azure Databricks.

3. What is Caching and when would you use it?

"Caching" refers to temporary information storage. An example of caching in action is whenever you visit a website frequently. Your browser may load information from the cache rather than the site's server to speed up data retrieval and reduce the server's load.

4. What Are the Different Caching Types?

These are the four main caching types:

- Web page caching

- Data caching

- Distributed caching

- Application or output caching

5. Do Compressed Data Sources Like .csv.gz Get Distributed in Apache Spark?

"Single-Threaded" is the term used when you read a compressed data source organized in serial data. Once this data is read off disk, it stays in memory as a distributed dataset. That means the first read isn't distributed.

It is challenging to break compressed files. But chunkable/readable files are distributed to several extents in an Azure data lake or Hadoop file system.

When many files are chunked up and compressed, a thread per file is created depending on the number of files.

6. Is Text Processing Supported by All Languages? How Are Multiple Languages Affected?

Multiple language support depends on the package. If, for instance, you're using Python with NTLK and Spacey, it will support various languages. And if you're using John Snow Labs with NLP library or Spark with MLLIB, it supports all languages.

7. Can You Use Spark for Streaming Data?

Spark is used to support several streaming processes simultaneously. A core part of Spark functionality allows you to read and write streaming data or multiple deltas.

8. Which ETL Operations Are Performed on Azure Databricks?

These are the ETL operations completed on data in Azure Databricks:

- Data is converted from Databricks to the data warehouse

- Data is loaded via bold storage

- Bold storage is used to store data short-term

9. What is Kafka and its Uses?

Apache Kafka is a streaming platform in Azure Databricks primarily used for constructing stream-adaptive apps and instant streaming data pipelines. Kafka is also used to ingest data from various sources, including logs, sensors, and financial transactions.

Databricks Runtime also uses Apache Kafka connectors for controlled streaming. It also provides message broker functionality, for example, a message queue to accommodate subscription to and publish data streams.

10. What do you know about Databricks secret?

A key-value pair used to protect confidential data is called a "secret." It comprises a unique key name enclosed in a secure environment. Each scope is allowed 1000 secrets, and the secret value must not exceed 128 KB.

11. What Is the Method Used to Create a Databricks Private Access Token?

Here's how to create a Databricks private access token for another Databricks user:

- Go to your Databricks workspace.

- At the top bar, select your Databricks username.

- Choose "User Settings" from the pull down menu.

- Select the "Access Tokens" tab.

- Click on "Generate New Token."

Conclusion

For a successful Databricks interview, prepare using the above pointers. For thorough prep, carry out mock interviews, practice solving coding questions, and review computer science fundamentals. Good Luck!

If you'd like your next job to have a 4 day work week (32hrs) but pay a full time salary, check out our remote jobs.